Structure of SASS

Multimodal Data Collection System

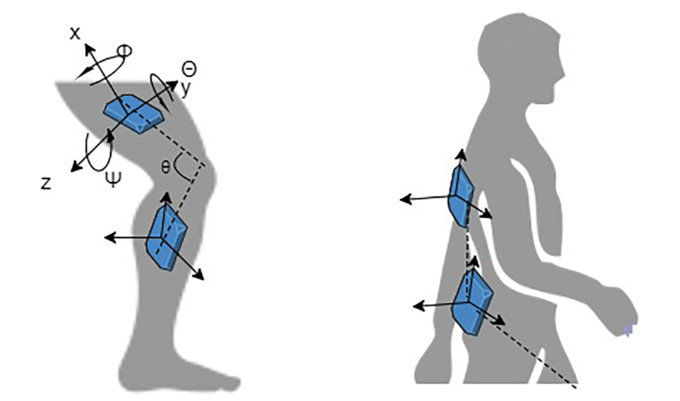

Build a robust, time-synchronized data collection system that captures data from multiple camera views and IMU sensors.
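A synchronized system has to place camera frames and IMU samples on one common timeline. As a minimal sketch, assuming both streams already share a clock (for example after hardware triggering or a measured offset correction) and that the IMU timestamps are sorted, one IMU channel can be linearly interpolated at each camera frame timestamp. The function name `interpolate_imu_at` is hypothetical and not part of SASS.

```python
from bisect import bisect_left
from typing import List, Sequence


def interpolate_imu_at(frame_ts: Sequence[float],
                       imu_ts: Sequence[float],
                       imu_vals: Sequence[float]) -> List[float]:
    """Hypothetical helper: linearly interpolate one IMU channel at each frame time.

    Assumes imu_ts is sorted ascending and shares a time base with frame_ts.
    Frames outside the IMU time range are clamped to the nearest IMU sample.
    """
    if not imu_ts or len(imu_ts) != len(imu_vals):
        raise ValueError("imu_ts and imu_vals must be non-empty and equal length")
    out: List[float] = []
    for t in frame_ts:
        # Index of the first IMU sample with timestamp >= t.
        i = bisect_left(imu_ts, t)
        if i == 0:
            out.append(imu_vals[0])  # before (or at) the first sample: clamp
        elif i == len(imu_ts):
            out.append(imu_vals[-1])  # after the last sample: clamp
        else:
            # Here imu_ts[i - 1] < t <= imu_ts[i], so the interval is non-empty.
            t0, t1 = imu_ts[i - 1], imu_ts[i]
            v0, v1 = imu_vals[i - 1], imu_vals[i]
            w = (t - t0) / (t1 - t0)
            out.append(v0 + w * (v1 - v0))
    return out
```

Linear interpolation is a reasonable default when the IMU rate is much higher than the frame rate; frames outside the IMU range are clamped rather than extrapolated, which avoids inventing values beyond the recorded data.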

REID Feature Extraction

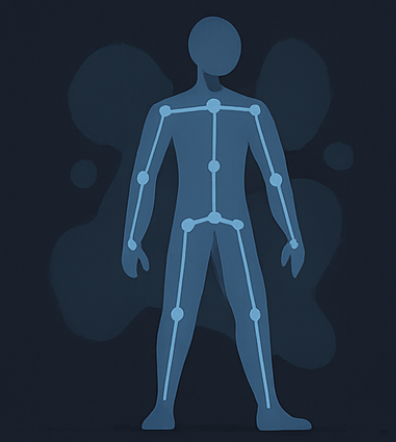

Extract features useful for REID from the collected dataset, such as 2D/3D pose, gait characteristics, and IMU-based features.
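For the IMU-based features, a minimal sketch might compute summary statistics of the acceleration magnitude and estimate cadence by counting peaks. This assumes a body-worn 3-axis accelerometer reporting m/s² at a fixed sampling rate; the function `imu_gait_features`, the 11 m/s² peak threshold, and the 0.3 s minimum gap between steps are illustrative assumptions, not tuned values from this project.

```python
import math
import statistics
from typing import Dict, Sequence, Tuple


def imu_gait_features(acc: Sequence[Tuple[float, float, float]],
                      rate_hz: float,
                      peak_threshold: float = 11.0,
                      min_gap_s: float = 0.3) -> Dict[str, float]:
    """Hypothetical gait features from 3-axis accelerometer samples (m/s^2).

    The threshold and minimum step gap are illustrative defaults, not tuned values.
    """
    if not acc or rate_hz <= 0:
        raise ValueError("need at least one sample and a positive sampling rate")
    # Orientation-independent magnitude of each acceleration sample.
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in acc]
    min_gap = max(1, int(min_gap_s * rate_hz))  # minimum samples between steps
    steps, last_peak = 0, -min_gap
    for i in range(1, len(mags) - 1):
        # Count a step at a local maximum above the threshold that is
        # far enough from the previously counted step.
        is_peak = (mags[i] > peak_threshold
                   and mags[i] >= mags[i - 1]
                   and mags[i] > mags[i + 1])
        if is_peak and i - last_peak >= min_gap:
            steps += 1
            last_peak = i
    duration_s = len(mags) / rate_hz
    return {
        "acc_mag_mean": statistics.fmean(mags),
        "acc_mag_std": statistics.pstdev(mags),
        "step_count": float(steps),
        "cadence_spm": 60.0 * steps / duration_s,  # steps per minute
    }
```

With a synthetic 2 Hz vertical oscillation of 9.81 + 3 sin(2π·2t) m/s² sampled at 50 Hz for 4 s, this sketch counts 8 steps, i.e. a cadence of 120 steps per minute. Real signals would typically need filtering before peak detection.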

Feature Evaluation & Correlation

Evaluate the effectiveness of different features for REID and tracking tasks, and analyze the correlations among these features.
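Both parts of this evaluation can be sketched under stated assumptions: pairwise Pearson correlation between per-sample feature values to analyze how features relate, and rank-1 accuracy (the first point of the CMC curve) with nearest-neighbour Euclidean matching between query and gallery feature vectors to measure REID effectiveness. The function names, the use of Pearson correlation, and the Euclidean distance are hypothetical choices; the project may use other metrics.

```python
import math
from typing import Dict, Hashable, Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length feature series (NaN if one is constant)."""
    n = len(a)
    if n != len(b) or n < 2:
        raise ValueError("need two equal-length series with at least 2 values")
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return float("nan")
    return cov / math.sqrt(va * vb)


def correlation_matrix(features: Dict[str, Sequence[float]]) -> Dict[str, Dict[str, float]]:
    """Hypothetical helper: pairwise Pearson correlation between named features."""
    names = list(features)
    return {a: {b: pearson(features[a], features[b]) for b in names} for a in names}


def rank1_accuracy(query_feats: Sequence[Sequence[float]],
                   query_ids: Sequence[Hashable],
                   gallery_feats: Sequence[Sequence[float]],
                   gallery_ids: Sequence[Hashable]) -> float:
    """Fraction of queries whose nearest gallery vector (Euclidean) has the same identity."""
    hits = 0
    for qf, qid in zip(query_feats, query_ids):
        best = min(range(len(gallery_feats)),
                   key=lambda j: math.dist(qf, gallery_feats[j]))
        hits += gallery_ids[best] == qid
    return hits / len(query_feats)
```

Running `rank1_accuracy` separately on pose-only, gait-only, and IMU-only vectors would give a per-feature comparison, while the correlation matrix shows which features carry largely redundant information.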